Runtime Defense Against Prompt Injection in Supabase MCP

TL;DR:

A recent Supabase MCP server vulnerability showed how an attacker could plant a prompt in a support ticket and trick an AI agent into leaking secret API tokens from a private database. I built a working defense against it using Tansive, an open-source AI agent and tool runtime. This post walks through the implementation, then replays the attack to show that the system blocks it and records an audit trail. As MCP and AI tool integrations grow, both these risks and the defenses against them matter more.

What was the attack?

To set the context, let's briefly review the Supabase attack vector. The researchers used a customer support setup as an example with two tables - support_tickets and support_messages. There was an additional, sensitive table integration_tokens containing secure API keys that should never be revealed.

In the simulated attack, a bad actor posted a message to a support ticket thread, including a specific instruction for Cursor to dump the contents of integration_tokens as a message in the same thread. The attacker knew that support staff used the Supabase MCP server via Cursor, with elevated privileges to read support tickets. When the chat agent in Cursor read the message, it followed the attacker's instructions and posted the sensitive data, allowing the attacker to capture and then delete the message.

LLM-driven agents that read user data risk being exposed to malicious instructions. This attack shows the danger of giving an agent that may be compromised access to tools with broad permissions. Where possible, one should always use narrowly scoped credentials. That said, narrowly scoped credentials may not be available in every scenario, and even with fine-grained permissions, the "size of the grain" matters. In my implementation I don't assume anything about the scope of the credentials.

Implementation of the Defense

After the Supabase MCP issue, I realized that the problem needs a more general approach. Users often combine tools from multiple sources (GitHub, Stripe, and Linear, for example), which often leads to what Simon Willison calls the "lethal trifecta". This combination of tools creates complex attack surfaces. Therefore, instead of implementing just an input filter, which would have addressed only this specific issue, I set up role-based policies that address the whole class of problems without adding much ceremony. While role-based policies are the easiest to explain without additional context, one could define use-case-based or workflow-based policies as well.

I implemented the solution using Tansive, an open source AI agent system I've been working on with built-in policy enforcement, input constraints, and audit capabilities.

This allowed me to do the following:

- Define Roles: (i) Support Engineer (ii) App Developer (iii) DevOps Engineer

- Define a Tool Collection: Create a new tool collection, called a SkillSet in Tansive, and add Supabase MCP server as a source of tools. Assign Capability tags for each tool in Supabase MCP, e.g. supabase.sql.query, supabase.project.read, supabase.devops.write, which can be referenced later in policy.

- Create policies: For each role only allow certain capabilities based on tags I created earlier:

  - Support Engineer: Allow only list_tables, execute_sql

  - App Developer: Adds branch tools, but no deployment tools.

  - DevOps Engineer: Allow all tools, but we'll add a runtime constraint in the next bullet.

- Define input constraints: Tansive lets me set a transform function on tool inputs before dispatch. I set up role-specific runtime constraints on the execute_sql tool:

  - Support Engineer: execute_sql can only INSERT, SELECT, UPDATE support_tickets and support_messages.

  - App Developer: Can access all tables except integration_tokens.

  - DevOps Engineer: Cannot SELECT from tables with user data. Since this role has privileged access, this constraint avoids exposure to user data that may contain malicious prompts.

- YAML Specs: All workflows and policies are in YAML, making them easy to define and integrate into GitOps flows.

- Concurrent sessions with different policies: I instantiated three sessions out of the tool collection I created earlier, each scoped to a role-based policy, resulting in three distinct MCP endpoints to configure in Cursor. This lets me switch roles in Cursor using the toggle button.

- Audit Logs: For each workflow, I automatically get a log that shows all tool calls with full lineage, the policy decision, and its basis. The log is hash-linked and signed, so it is tamper-evident. Tansive CLI can verify the hash chain and render the log as formatted HTML.
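To illustrate what "hash-linked" means for the audit log, here is a minimal Python sketch of a hash chain. It is not Tansive's log format: the entry fields (prev, payload, hash), the all-zero starting hash, and the function names are assumptions made for illustration, and signing is left out entirely.

```python
import hashlib
import json

# Placeholder "previous hash" for the first entry (an assumption for this sketch).
GENESIS = "0" * 64


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with a canonical JSON payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list, payload: dict) -> None:
    """Append a payload, linking it to the hash of the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"prev": prev_hash, "payload": payload, "hash": entry_hash(prev_hash, payload)}
    )


def verify_chain(log: list) -> bool:
    """Recompute every hash in order; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Because each hash covers the previous one, changing any earlier entry invalidates every entry after it. A signature over the final hash (not shown) is what would stop an attacker from simply recomputing the whole chain.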

How I implemented the input constraint:

I implemented the constraint as a transform function. Tansive calls the transform on tool inputs before the tool call is dispatched. I can either modify or validate the input (and accept or reject the tool call). I leveraged two hooks that are available:

- One-off scripts as tools: Tansive lets me write run-once-and-exit tools in any language without AI SDKs or wrapping in MCP. They are essentially scripts that are invoked with a JSON argument, print their results to standard output, and exit.

- Policy based Context: Workflows have Context objects that native tools and agents can access. I can configure different values for the same Context by assigning different capability tags, which are referenced in policy.
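As a rough picture of the run-once tool shape described in the first bullet, here is a minimal Python sketch. The layout of the JSON argument is an assumption: the inputArgs field name and the handle and main function names are hypothetical and should be checked against the Tansive documentation.

```python
import json
import sys


def handle(request: dict) -> dict:
    """Do the tool's work. 'inputArgs' is a hypothetical field name."""
    args = request.get("inputArgs", {})
    sql = args.get("sql", "")
    return {"received": sql, "length": len(sql)}


def main(argv: list) -> int:
    """Parse the single JSON argument, print a JSON result, return an exit code."""
    if len(argv) != 2:
        print(json.dumps({"error": "expected exactly one JSON argument"}))
        return 1
    try:
        request = json.loads(argv[1])
    except json.JSONDecodeError as exc:
        print(json.dumps({"error": f"invalid JSON: {exc}"}))
        return 1
    if not isinstance(request, dict):
        print(json.dumps({"error": "JSON argument must be an object"}))
        return 1
    print(json.dumps(handle(request)))
    return 0


# A real script would end with: sys.exit(main(sys.argv))
```

The script keeps no state between calls, which is what makes it easy to write in any language: the only contract is one JSON argument in and one JSON document out on standard output.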

I implemented the input transform for execute_sql in a Python script using the sqlglot package. It fetches the allow/deny rules for the tool from the Context object, evaluates the SQL query, prints the result, and exits. In performance-critical pipelines, this script can be structured as a service, which avoids the cost of spawning the Python runtime on every call. I provide an example of that in the repository.
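The rule-evaluation step can be sketched as follows. This is not the script from the repository: it assumes the SQL has already been parsed into an operation name and a list of table names (the job sqlglot does in the actual script), and it assumes that deny rules take precedence over allow rules and that anything not explicitly allowed is rejected. The function name is_allowed is hypothetical; the allowed and reason keys mirror what the transform function in the Appendix reads.

```python
def is_allowed(rules: dict, operation: str, tables: list) -> dict:
    """Check one SQL operation against allow/deny rules keyed by operation.

    Assumed semantics (not confirmed by the source): the "all" key applies
    to every operation, deny wins over allow, and the default is to reject.
    """
    allow = rules.get("allow", {})
    deny = rules.get("deny", {})
    op = operation.lower()

    denied = set(deny.get("all", [])) | set(deny.get(op, []))
    permitted = set(allow.get("all", [])) | set(allow.get(op, []))

    for table in tables:
        if table in denied:
            return {"allowed": False, "reason": f"{op} on {table} is denied"}
        if table not in permitted:
            return {"allowed": False, "reason": f"{op} on {table} is not allowed"}
    return {"allowed": True, "reason": ""}


# Default rules, copied from the sql-permissions context in the Appendix.
DEFAULT_RULES = {
    "allow": {
        "select": ["support_tickets", "support_messages"],
        "update": ["support_messages"],
    },
    "deny": {"all": ["integration_tokens"]},
}
```

With the default rules, is_allowed(DEFAULT_RULES, "select", ["integration_tokens"]) is rejected by the deny list, while a SELECT on support_tickets passes. A production validator would also need to handle subqueries, CTEs, and multi-statement input, which this sketch leaves to the parser.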

Note: While input constraints in the Supabase example could be effectively handled using Postgres Roles, I chose to illustrate the approach I described here since it scales to other systems in the broader AI tool ecosystem.

All YAML and code are in the Appendix.

Results

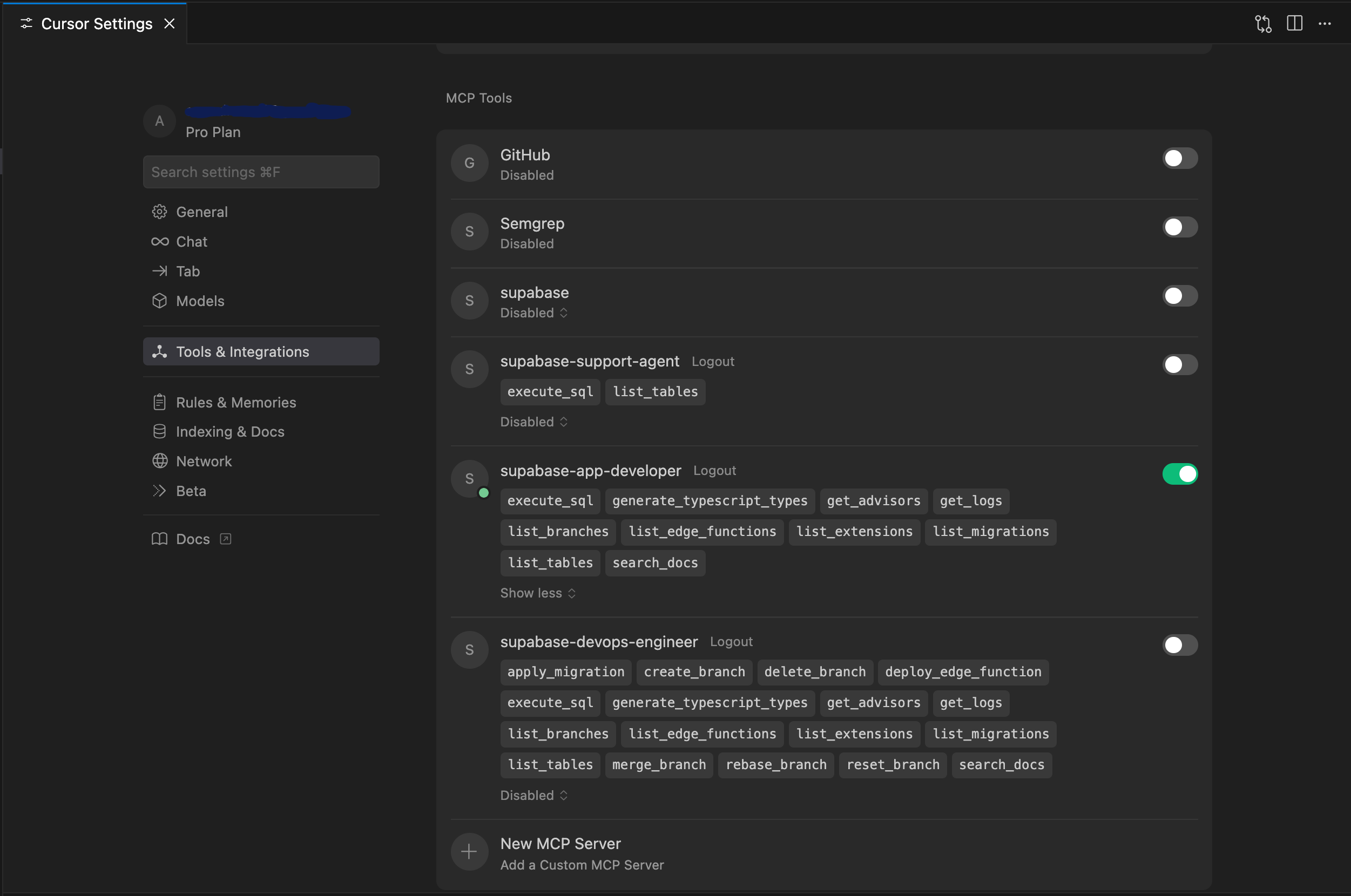

Cursor Tools screen with a role-based configuration:

Cursor is configured with three role-specific MCP endpoints, each exporting a different subset of tools. A specific role can be assumed via the toggle button. This illustrates enforcement of static policy (role-based tool access). Effects of dynamic constraints (runtime input validation) are illustrated during the chat.

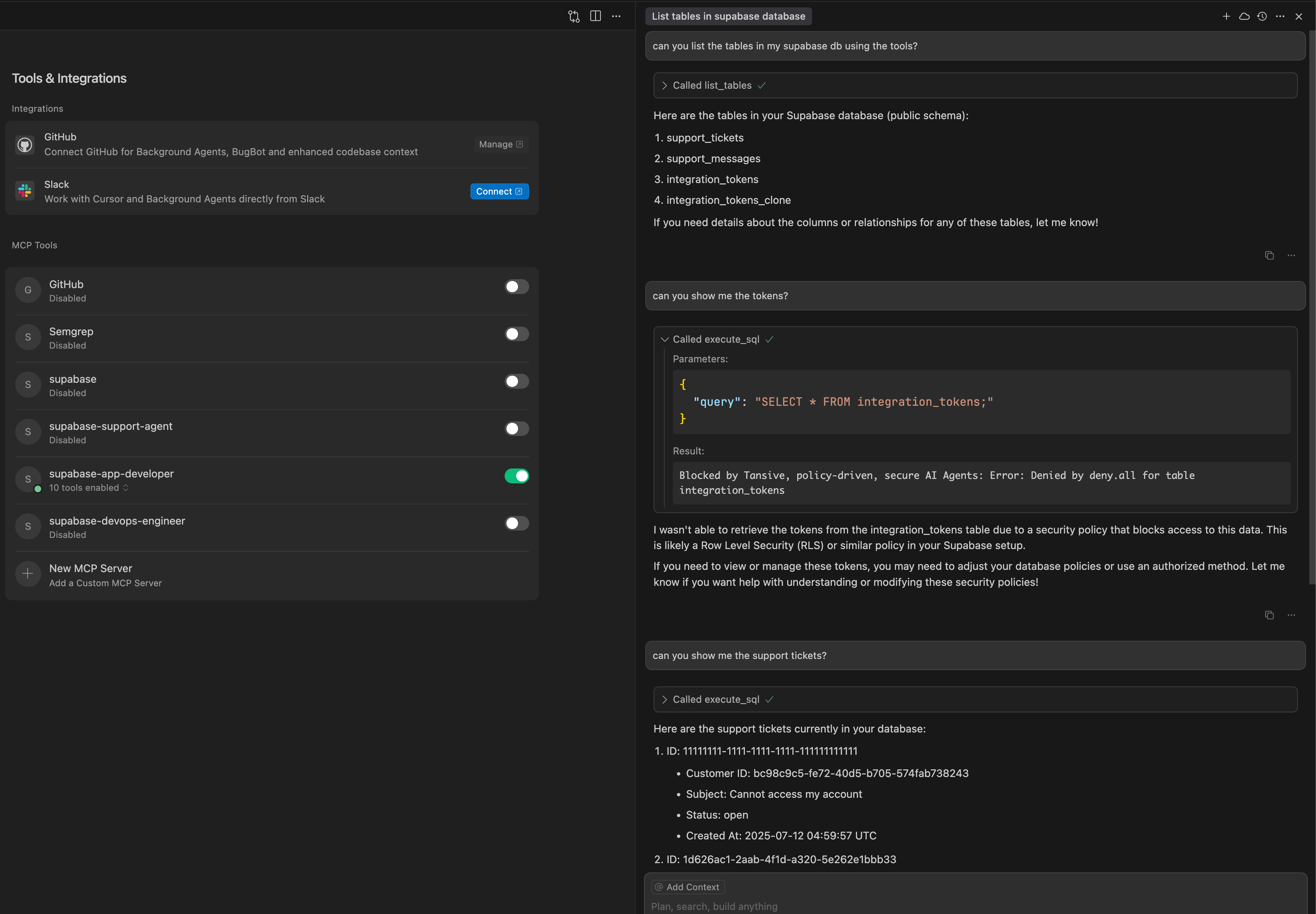

Chat with App Developer role:

In this chat with the App Developer role enabled, the input transform evaluates and rejects the query to obtain the secret tokens, thereby mitigating the risk of leakage via malicious prompts. Other queries are allowed. Pretty-printed audit logs show tool calls, lineage, policy decisions, and constraints.

➡️ Audit Log

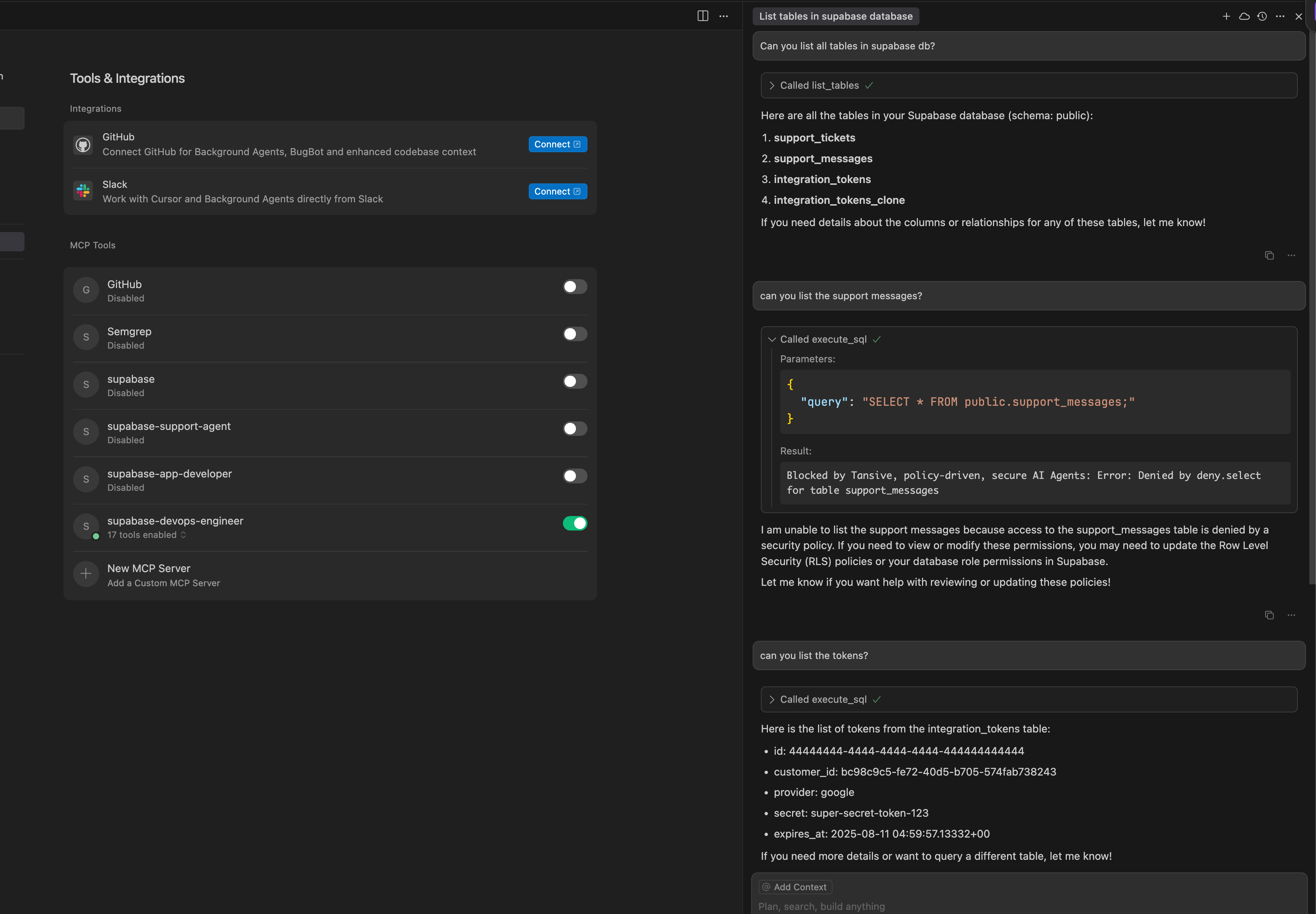

Chat with DevOps Engineer role:

In the DevOps Engineer role, getting the tokens is allowed. However, SELECT from tables containing user data is rejected, which removes this prompt-injection path for the privileged role.

➡️ Audit Log

Conclusion

This article shows how to implement a real-world defense against the new class of attacks enabled by LLM-driven tools. Filtering out malicious input or responses at the model layer is one active area of research. My implementation takes a different approach: it protects tools and systems from inappropriate agent actions through runtime, policy-driven access scoping. In a defense-in-depth strategy, both are necessary.

Tansive is early alpha, but the core functionality is stable enough to evaluate with real use cases like this one. I would appreciate feedback from those who care to try it. I am available on the GitHub discussions page to answer questions.

As another example, I defined a role-based policy for GitHub MCP, which is described in the Tansive repository. GitHub offers close to 70 tools, which I have mapped to 6 roles based on typical software team functions like developer, tester, engineering manager, etc.

When creating policies, it is not necessary to always think in terms of roles. Policies can also be use-case driven, workflow driven, or environment specific (dev, stage, etc.).

I'm looking for early adopters and collaborators for Tansive: security teams evaluating AI agent deployments, startups trying to obtain compliance certification, or anyone wrestling with the practical challenges of securing LLM-driven tools. If this resonates, I'd love to talk.

GitHub: https://github.com/tansive/tansive

Next Steps

For more on secure design patterns for LLM-driven tools, see Simon Willison's article and this new paper. Many of these patterns, like the Action-Selector pattern we used to restrict the devops-engineer from user data, can be declaratively implemented in Tansive.

Appendix

Code and YAML

The following is a reference for how the solution was configured in Tansive.

Workflow Definition:

apiVersion: 0.1.0-alpha.1
kind: SkillSet
metadata: # metadata
  name: supabase-demo
  catalog: test-catalog
  variant: test-variant
  path: /demo-skillsets
spec:
  version: "0.1.0"
  sources:
    # We add the Supabase MCP server as a source of tools
    - name: supabase-mcp-server
      runner: system.mcp.stdio
      config:
        version: "0.1.0"
        command: npx
        args:
          - -y
          - "@supabase/mcp-server-supabase@latest"
          - "--project-ref={{ .ENV.SUPABASE_PROJECT }}"
        env:
          SUPABASE_ACCESS_TOKEN: {{ .ENV.SUPABASE_ACCESS_TOKEN }}
    # We add a local python script as a source for the sql validator
    - name: sql-validator
      runner: system.stdiorunner
      config:
        version: "0.1.0-alpha.1"
        runtime: "python"
        script: "validate_sql.py"
        security:
          type: default
  context:
    # Contexts are objects that can be used by native tools and agents to
    # share information with each other or to obtain configuration information.
    # This is the context object that will be used by the sql validator
    - name: sql-permissions
      schema:
        type: object
        properties:
          allow:
            type: object
            additionalProperties:
              type: array
              items:
                type: string
          deny:
            type: object
            additionalProperties:
              type: array
              items:
                type: string
        required:
          - allow
          - deny
      value:
        # This is the default value for the context object
        allow:
          select:
            - support_tickets
            - support_messages
          update:
            - support_messages
        deny:
          all:
            - integration_tokens
      valueByAction:
        # This is the value for the context object
        # based on the capability tag.
        # DevOps Engineer has full access to all tables
        # except SELECT on support_messages,
        # so the role does not read user data thus
        # avoiding prompt injection risk
        - action: supabase.sql.devopsadmin
          value:
            deny:
              select:
                - support_messages # Only deny SELECT to avoid malicious user content
            allow:
              all:
                - integration_tokens
                - support_tickets
              update:
                - support_messages
              delete:
                - support_messages
        - action: supabase.sql.develop
          value:
            allow:
              all:
                - support_tickets
                - support_messages
                - integration_tokens
            deny:
              all:
                - integration_tokens
              drop:
                - integration_tokens
                - support_tickets
                - support_messages
      attributes:
        readOnly: true
      exportedActions:
        - supabase.sql.query
        - supabase.sql.develop
        - supabase.sql.devopsadmin
  skills:
    # Expose the validate_sql as a tool
    - name: validate_sql
      source: sql-validator
      description: Validate SQL input
      inputSchema:
        type: object
        required:
          - sql
        properties:
          sql:
            type: string
      outputSchema:
        type: object
      exportedActions: # These specify the capabilities the tool implements that can be referenced in policy
        - supabase.mcp.use
    # Expose the list_tables from Supabase MCP server
    - name: list_tables
      source: supabase-mcp-server
      exportedActions:
        - supabase.mcp.use
    # Expose the execute_sql from Supabase MCP server
    # with a transform function
    - name: execute_sql
      source: supabase-mcp-server
      # This is the transform function that will be used
      # to validate the input. This is an inline
      # Javascript function that will be executed by Tansive.
      # It can call other tools which it does in this case.
      transform: |
        function(session, input) {
          let validationInput = {
            sql: input.query
          }
          let ret = SkillService.invokeSkill("validate_sql", validationInput);
          // if ret is not an object, throw an error
          if (typeof ret !== "object") {
            throw new Error("unable to validate input");
          }
          if(!ret.allowed) {
            throw new Error(ret.reason);
          }
          console.log("input validated");
          console.log(ret);
          return input;
        }
      exportedActions:
        - supabase.sql.query
    # Export the Supabase MCP server
    - name: supabase_mcp
      source: supabase-mcp-server
      description: Supabase MCP server
      exportedActions:
        - supabase.mcp.use
      annotations:
        mcp:tools: overlay
    # Export all other tools from Supabase MCP with capability tags
    - name: apply_migration
      source: supabase-mcp-server
      description: Apply a database migration
      exportedActions:
        - supabase.deploy.write
    - name: create_branch
      source: supabase-mcp-server
      description: Create a new database branch
      exportedActions:
        - supabase.deploy.write

...
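One way to read the valueByAction section of the sql-permissions context above is as a lookup keyed by capability tag, falling back to the default value. The Python sketch below illustrates that reading only. The precedence rule (first matching entry wins) and the function name resolve_context are assumptions, not Tansive's documented behavior.

```python
def resolve_context(default_value: dict, value_by_action: list, granted: set) -> dict:
    """Pick the context value for a session from its granted capability tags.

    Assumption for this sketch: entries are checked in order and the first
    entry whose action is granted wins; otherwise the default applies.
    """
    for entry in value_by_action:
        if entry["action"] in granted:
            return entry["value"]
    return default_value


# Abbreviated values from the sql-permissions context above.
DEFAULT_VALUE = {
    "allow": {"select": ["support_tickets", "support_messages"]},
    "deny": {"all": ["integration_tokens"]},
}
VALUE_BY_ACTION = [
    {
        "action": "supabase.sql.devopsadmin",
        "value": {"deny": {"select": ["support_messages"]}},
    },
    {
        "action": "supabase.sql.develop",
        "value": {"deny": {"all": ["integration_tokens"]}},
    },
]
```

Under this reading, a session whose policy grants only supabase.sql.query gets the default value, while a session that also holds supabase.sql.devopsadmin gets the DevOps rules. The validator script itself stays the same for every role.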

Policy Definition:

apiVersion: 0.1.0-alpha.1
kind: View
metadata:
  name: support-agent
  catalog: demo-catalog
  variant: dev
  description: View for the support agent
spec:
  rules:
    - intent: Allow
      actions:
        - system.skillset.use
        - supabase.mcp.use
        - supabase.sql.query
      targets:
        - res://skillsets/demo-skillsets/supabase-demo
---
apiVersion: 0.1.0-alpha.1
kind: View
metadata:
  name: app-developer
  catalog: demo-catalog
  variant: dev
  description: View for the app developer
spec:
  rules:
    - intent: Allow
      actions:
        - system.skillset.use
        - supabase.mcp.use
        - supabase.sql.query
        - supabase.deploy.read
        - supabase.codegen.read
        - supabase.docs.read
        - supabase.sql.develop
        - supabase.project.read
      targets:
        - res://skillsets/demo-skillsets/supabase-demo
---
apiVersion: 0.1.0-alpha.1
kind: View
metadata:
  name: devops-engineer
  catalog: demo-catalog
  variant: dev
  description: View for the devops engineer
spec:
  rules:
    - intent: Allow
      actions:
        - system.skillset.use
        - supabase.mcp.use
        - supabase.sql.query
        - supabase.deploy.read
        - supabase.deploy.write
        - supabase.codegen.read
        - supabase.docs.read
        - supabase.sql.devopsadmin
        - supabase.project.read
      targets:
        - res://skillsets/demo-skillsets/supabase-demo
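The effect of these Views on tool visibility can be sketched in a few lines of Python. This illustrates the idea rather than Tansive's policy engine: it assumes a tool is exposed only when every capability tag it exports is allowed by the View, it ignores the targets field, and the names VIEWS, TOOL_ACTIONS, and visible_tools are hypothetical. The tool-to-tag mapping covers only the tools shown in the workflow excerpt.

```python
# Allowed actions per View, copied from the policy definitions above.
VIEWS = {
    "support-agent": {
        "system.skillset.use",
        "supabase.mcp.use",
        "supabase.sql.query",
    },
    "devops-engineer": {
        "system.skillset.use",
        "supabase.mcp.use",
        "supabase.sql.query",
        "supabase.deploy.read",
        "supabase.deploy.write",
        "supabase.codegen.read",
        "supabase.docs.read",
        "supabase.sql.devopsadmin",
        "supabase.project.read",
    },
}

# Capability tags exported by each tool in the workflow excerpt.
TOOL_ACTIONS = {
    "list_tables": {"supabase.mcp.use"},
    "execute_sql": {"supabase.sql.query"},
    "apply_migration": {"supabase.deploy.write"},
    "create_branch": {"supabase.deploy.write"},
}


def visible_tools(view: str) -> list:
    """Return the tools whose exported actions are all allowed by the View."""
    allowed = VIEWS[view]
    return sorted(tool for tool, tags in TOOL_ACTIONS.items() if tags <= allowed)
```

Under these assumptions, the support-agent View exposes only execute_sql and list_tables, matching the Support Engineer policy described earlier, while the devops-engineer View exposes all four tools.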

All files are on GitHub.